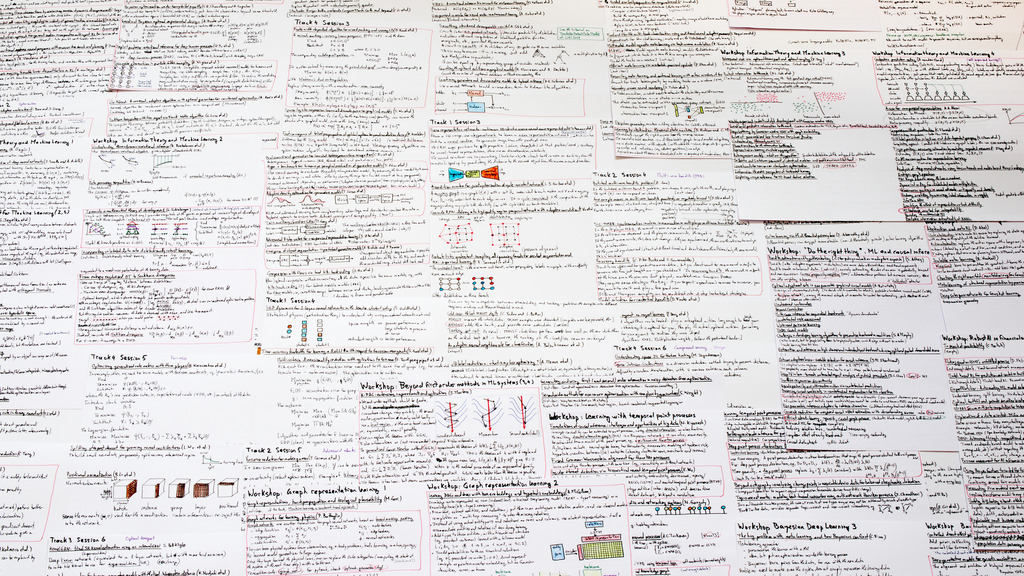

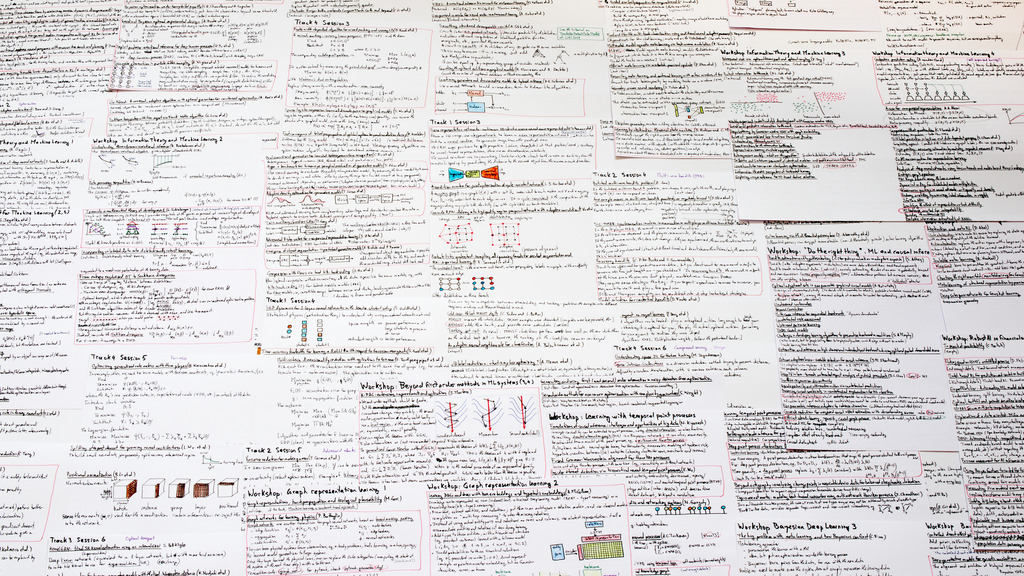

I have not attended the NeurIPS conference, but I have spent a few weeks binge-watching most of it. Here are some of the topics I have found interesting.

If you want to read/watch something funny, check the following topics: What happens if you set all the weights of a neural network to the same value? What happens if a layer becomes infinitely wide? What happens if a network becomes infinitely deep? Is it really pointless to consider deep linear networks?

If you want to look at nice pictures, check the recent explorations of the loss surface of neural nets.

If you want something easy to understand, check the discussions on fairness.

If you want something new, check the new types of layers (in particular those corresponding to combinatorial operations), sum-product networks (which are more expressive than traditional neural nets), mesure-valued derivatives (a third way of computing the gradient of an expectation), the many variants of gradient descent, second-order optimization methods, perhaps also Fourier-sparse set functions and the geometric approach to information theory.

If you want applications to finance... there were almost none. There was a workshop on "robust AI for the financial services", but the corresponding talks were either rather empty or only had a thin connection to finance.

Given the size of the conference, other people's overview of the conference may have no overlap with mine. There are some simplifications (or misunderstandings), and this is more a commented reading list than a summary.

Fairness is one of the big concerns with machine learning models applied in the real world, affecting human lives. Unfortunately, there are many, incompatible definitions of “fairness” – if you choose one, someone will be able to complain.

Most of those definitions focus on “group fairness”: first define a few “protected attributes” (gender, skin colour, age, etc.), then choose which measure should be the same across all groups – false negative rate, false positive rate, etc.

Long list of fairness measures https://aif360.readthedocs.io/en/latest/ Fairness assessment for AI in the finance industry https://arxiv.org/abs/1912.07211

“Individual fairness”, in contrast, asks that similar subjects be treated similarly – this is trickier, because we need to define what we mean by “similar subjects” and “similar treatment”.

Accounting for fairness can be done at three levels. First, we can pre-process the data, e.g., by “disentangling” the protected attributes from the rest of the data, with a vector embedding, not unlike “fader networks”. Second, we can use the fairness as a constraint or a penalty when fitting the model. Lastly, we can estimate a model without paying attention to fairness, and correct its output to make it fair.

Representation learning and fairness S. Koyejo https://slideslive.com/38923185/representation-learning-and-fairness Paradoxes in fair machine learning https://papers.nips.cc/paper/9043-paradoxes-in-fair-machine-learning

It seems that we can put almost anything inside a neural net (or, more generally, a “computation graph”), provided it is differentiable (and has informative gradients). But what about combinatorial optimization? Since the result is discrete, the function it computes is locally constant, and the gradient is zero – it is not informative.

It is actually possible to put a combinatorial optimization step inside a neural network: in the forward pass, perform the actual (discrete) optimization but, to compute a gradient in the backward pass, use a continuous relaxation of the combinatorial problem.

Differentiation of Blackbox Combinatorial Solvers M. Vlastelica et al. https://arxiv.org/abs/1912.02175

Sorting is another combinatorial operation: it can be used in a neural network using a similar idea, noticing that 1-dimensional optimal transport problems can be solved by sorting and adding an entropic regularization.

Differentiable ranking and sorting using optimal transport M. Cuturi et al. https://arxiv.org/abs/1905.11885

Many neural networks rely on weight sharing: CNN, RNN, etc. How far can we share those weights? Pushing the idea of weight sharing to its extreme, we can share all the weights, so that the neural network only has one parameter. Even worse, instead of learning the best value for that parameter, we can set it to some number chosen in advance. The only “learning” left to do is that of the structure of the network. Surprisingly, the resulting network has a decent performance and, if we keep the structure of the best network found and learn the weight (or the weights, if we decide to relax the single-weight constraint), it is even competitive with (but not better than) state-of-the-art networks, and often much smaller.

Weight-agnostic neural networks A. Gaier and D. Ha https://arxiv.org/abs/1906.04358 https://weightagnostic.github.io/

Over the years, networks are becoming deeper and deeper: how deep can they be? Could they be infinitely deep? The same layer can be repeated ad infinitum: if the weights of all those copies are shared, the network is just iterating a function, and (under a few assumptions) should converge to a fixed point of that function. That infinite number of layers can be replaced by a single layer, computing this fixed point (the gradient can be computed without iterating).

Deep equilibrium models S. Bai et al. https://arxiv.org/abs/1909.01377

We can also look at neural nets infinite in the other direction: it turns out that infinitely large neural nets (“neural tangent kernel”, NTK) correspond to Gaussian processes.

Neural Tangent Kernel: Convergence and Generalization in Neural Networks A. Jacot et al. (2018) https://arxiv.org/abs/1806.07572 Neural tangents: fast and easy infinite neural networks in Python R. Novak et al. https://openreview.net/forum?id=SklD9yrFPS The natural tangent kernel T.G.J. Rudner et al. http://timrudner.com/assets/papers/Natural_Neural_Tangent_Kernel.pdf https://slideslive.com/38922656/contributed-talk-the-natural-neural-tangent-kernel-neural-network-training-dynamics-under-natural-gradient-descent

Neural networks combine a few simple operations: linear transformations and element-wise non-linearities. Can we add more complex operations? It turns out that adding multiplications can make the network more expressive (indeed, multiplication is one of the ideas in LSTMs) and more useful: (under some assumptions) those “sum-product networks” (or “circuits”) can be used to represent probability distributions and allow the efficient computation of the partition function, marginals, etc.

Smoothing structured decomposable circuits A. Shih et al. https://arxiv.org/abs/1906.00311 Tractable probabilistic models V. van den Broeck et al. (UAI 2019) https://www.youtube.com/watch?v=NcAvJx3eEfg&list=PLbCIjDmXT4qvXXtoznO1SrKebnYkuf4su&index=2&t=0s

Once trained, artificial neural networks have their weights fixed: this is very different from biological neurons, for which the connections can be come stronger or weaker as they are used. Hebbian learning tries to mimic this behaviour: information is stored, not only in the activations, but also in the weights, which progressively change as the network is used.

How metalearning could help us accomplish our grandest AI ambitions, and early, exotic steps in that direction https://slideslive.com/38923101/how-metalearning-could-help-us-accomplish-our-grandest-ai-ambitions-and-early-exotic-steps-in-that-direction?locale=en

Optimal transport mapping input convex neural networks A.V. Makkuva https://arxiv.org/abs/1908.10962

This looks like that: deep learning for interpretable image recognition C. Chen et al. https://arxiv.org/abs/1806.10574

(In reinforcement learning or variational inference), we sometimes have to compute gradients of expectations, in which the variable wrt we want the gradient, θ, does not only appear in the integrand, but also in the probability distribution.

E[ f(x,θ) ] where x ∼ p(x,θ)

There are two well-known ways of computing this gradient: the “reparametrization trick” (write x as x = g(z,θ), where z ~ p(z) follows a distribution that no longer depends on θ), and the “reinforce” algorithm (write the expectation as in integral, differentiate under the integral sign, and write the result as ∫...p(x,θ)dx, i.e., E[...]). The first approach is best, but not always possible. The second approach has such a high variance that we need all the variance reduction tricks in the book to make it usable.

There is a third way: after differentiating under the integral sign we end up with dp(x,θ)/dθ, which can be expressed as a linear combination of two probability densities – the integral is then a difference of two expectations, which can be computed by Monte Carlo simulations.

Measure-valued derivatives for approximate Bayesian inference M. Rosca et al. http://bayesiandeeplearning.org/2019/papers/76.pdf Monte Carlo gradient estimation in machine learning S. Mohamed et al. (2019) https://arxiv.org/abs/1906.10652

Gradient descent estimates use the gradient at the current point.

SGD x[n+1] ← x[n] - α ∇f(x[n])

By analogy with the implicit and explicit schemes in the numerical solutions of ordinary differential equations, we can evaluate the gradient at the new point, x[n+1] instead of x[n].

“implicit” SGD x[n+1] ← x[n] - α ∇f(x[n+1])

We do not know that point yet, but we can approximate it

Mirror descent y[n+1] ← x[n] - α ∇f(x[n])

x[n+1] ← x[n] - α ∇f(y[n+1])One can add a regularization term (generalized projection), replace x and y with averages, and use an adaptive learning rate.

UniXGrad: a universal, adaptive algorithm with optimal guarantees for constrained optimization A. Kavis et al. https://arxiv.org/abs/1910.13857

(Approximate) proximal optimization (aProx) minimizes an approximation of the objective (e.g., linear, floored at 0), with a penalty to keep the new point close to the previous.

The importance of better models in stochastic optimization H. Asi and J Duchi https://arxiv.org/abs/1903.08619

In a neural network, we want the “information” present in the input to be preserved, but simplified and stripped of its noise. “Mutual information” can be used to measure how much information is shared, either between layers of a network, or between patches of an image (nearby patches should contain similar information).

Putting an end to end-to-end S. Löwe et al. https://arxiv.org/abs/1905.11786

Many optimization algorithms (gradient descent, Newton, etc.) are approximate Bayesian learning rules.

Deep learning with Bayesian principles M.E. Khan https://slideslive.com/38923183/deep-learning-with-bayesian-principles

Hyperparameters are often discrete choices: optimizing a discrete function f: 2^V → ℝ only known from a few samples looks like an impossible task, unless we can approximate this function in some way. One can define the Fourier transform of such a function and assume it is sparse and low-frequency (i.e., if there are interactions between the inputs, they involve few variables) – this approximation is similar to image or sound compression.

Efficiently learning Fourier sparse set functions A. Amrollahi et al. https://papers.nips.cc/paper/9648-efficiently-learning-fourier-sparse-set-functions

High-dimensional Bayesian optimization using low-dimensional feature spaces R. Moriconi et al. https://arxiv.org/abs/1902.10675

Since a composition of linear functions is still linear, a linear neural network, i.e., a neural network all of whose activation functions are the identity, is equivalent to a 1-layer network – going deeper looks pointless. But that is only in terms of expressivity: from an optimization point of view, a 1-layer linear network and a deep linear network are very different – depth provides some “implicit regularization”.

Implicit regularization in deep matrix factorization S. Arora et al. https://arxiv.org/abs/1905.13655

The activations in a neural network form 4-dimensional tensors, width×height×channel×sample, and normalizing them along some of those dimensions helps training: batch-norm, layer-norm, etc.

Positional normalization B. Li et al. https://arxiv.org/abs/1907.04312

The Lipschitz constant of a neural network controls how robust it is: it can be used to regularize the network.

Data-dependent sample complexity of deep neural networks via Lipschitz augmentation C. Wei et al. https://arxiv.org/abs/1905.03684 Estimation of the Lipschitz constant of deep neural networks (M. Fazlyab et al.) https://arxiv.org/abs/1906.04893

For nice pictures of the loss landscape of a neural net, and how regularization affects it:

https://losslandscape.com/

When training a neural network the optimization stops at a local minimum, but there are many of them. Since the loss surface for the training and the test set are slightly different, flat minima generalize better than narrow ones.

Visualizing the loss landscape of neural nets H. Li et al. https://arxiv.org/abs/1712.09913

But the loss surface need not be symmetric around those local minima: they can have a steep slope on one side, and a gentle one on another. Those minima do not generalize well, but biasing them towards the gentle slope improves generalization.

Asymmetric valleys: beyond sharp and flat local minima H. He et al. https://arxiv.org/abs/1902.00744

Gradient descent tends to zig-zag towards the optimum when the loss surface is anisotropic. In contrast, Newton's method uses the local geometry of the loss surface (the Hessian, i.e., the second derivative of the loss function) to make it (locally) isotropic.

SGD x ← x - α ∇f Newton x ← x - α H⁻¹ ∇f

The Hessian can be replaced by an approximation, easier to compute and/or invert: Adam is actually a diagonal approximation, but there are finer ones.

K-FAC: extensions, improvements and applications J. Martens https://slideslive.com/38922824/kfac-extensions-improvements-and-applications

Instead of computing the Hessian, we can train a neural network to approximate it.

Metalearning with warped gradient descent S. Flennerhag https://arxiv.org/abs/1909.00025 https://slideslive.com/38923089/contributed-talk-1-metalearning-with-warped-gradient-descent Scalable metalearning R. Hadsell

When using second-order methods (i.e., optimization methods requiring the first and second derivatives – gradient and Hessian), it is often recommended to use very large batch sizes for the Hessian.

The eigenvalues of the Hessian measure the step sizes in various directions: if the Hessian is observed with noise, the eigenvalues are not only noisy, but biased – large batch sizes reduce this bias. Random matrix theory (RMT) can help estimate and correct it.

How does mini-batching affect curvature information for second order deep learning optimization? D. Granziol et al. https://slideslive.com/38922826/spotlight-talks

The entropy of a discrete probability distribution measures how “diverse” it is, but it assumes that the possible values are as different as possible. For instance, the uniform distribution on the 3-element set {cat, kitten, plane} maximizes the entropy. Since cats and kittens are very close, and very different from planes, a maximally “diverse” distribution should give more than 1/3 of the probability mass to planes, and less than 2/3 to cats and kittens.

It is possible to generalize the notion of entropy to account for the “geometry” of the underlying space.

GAIT: a geometric approach to information theory J. Gallego et al. https://arxiv.org/abs/1906.08325

The total correlation generalizes mutual information.

I(X;Y) = H(X,Y) - H(X) - H(Y) TC(X1,...,Xn) = H(X1,...,Xn) - H(X1) - ... - H(Xn)

(It can also be defined, like the mutual information, as the KL divergence between the joint distribution of the X[i]'s and the product of marginals.)

It can be used to measure if a set of variables are independent (or conditionally independent).

To estimate a large correlation matrix from a small number of observations, assume that the observed variables X depend on a small number of latent variables Z, with each observed variable depending on at most one latent variable. Those independence conditions can be expressed with the total correlation and the model Z = WX + noise can be estimated by minimizing TC(X|Z) + TC(Z) + λ∑TC(Z|Xi).

In finance, with stock returns, we recover industry classifications.

Fast structure learning with modular regularization G. VerSteeg et al. https://arxiv.org/abs/1706.03353 https://github.com/hrayrhar/T-CorEx https://github.com/gregversteeg/LinearCorex

PCA (to find outliers) can be regularized with quantum (von Neumann) entropy.

H(U) = - Trace( U log U ) where U≽0

(As expected with “quantum” notions, it is sometimes counter-intuitive: for instance, it does not always increase when you expect it to.)

Quantum entropy scoring for fast robust mean estimation and improved outlier detection Y. Dang et al. https://arxiv.org/abs/1906.11366

There are many ways of defining a notion of “distance” between probability distributions: for instance, integral probability metrics (IPM) look at their difference, while divergences look at their quotient.

IPM(p,q) = Sup_g | E_p[g] - E_q[g] | where g∈H (e.g., a ball in a RKHS) D(p,q) = ∫ q ϕ(p/q)

It is possible to use those notions to design tests to compare distributions (but the distribution of the test statistic under the null hypothesis may be tricky to derive).

Interpretable comparison of distributions and models A. Gretton et al. https://slideslive.com/38923184/interpretable-comparison-of-distributions-and-models

From entropy-regularized optimal transport to Sinkhorn divergences A. Genevay https://slideslive.com/38922976/sinkhorn-divergences-bridging-the-gap-between-optimal-transport-and-mmd

Graph-based discriminators: sample complexity and expressiveness R. Livni and Y. Mansour https://arxiv.org/abs/1906.00264

While our genome is fixed, which genes are expressed depends on the type of cell and changes over time, for instance during embryogenesis, or when a disease progresses.

“Single-cell RNA sequencing” (scRNAseq) can identify which genes are expressed in individual cells, and gives snapshots of their evolution. (Unbalanced) optimal transport can link those snapshots.

Optimal transport methodology for lineage tracing K. Yang https://slideslive.com/38922964/scalable-unbalanced-optimal-transport-using-generative-adversarial-networks Towards a mathematical theory of development G. Schiebinger https://slideslive.com/38922972/analyzing-developmental-processes-with-optimal-transport

A pre-trained convolutional neural net can reduce an image to a grid of features. The style of an image can be defined as the empirical distribution of those features, forgetting their location. To perform style transfer, one can learn an optimal transport (Monge) map from the style of an image to that of another image.

Wasserstein style transfer Y. Mroueh https://arxiv.org/abs/1905.12828 https://wasserstein-transfer.github.io/

Word embeddings from different languages can often be aligned, either by a rotation or, more generally, with optimal transport.

Generalizing learning with optimal transport S. Jegelka et al. https://slideslive.com/38922971/generalizing-learning-with-optimal-transport-invariances-and-generative-models-across-incomparable-spaces

Word embeddings (or embeddings of other objects) often use Euclidean space, in which representing hierarchies is tricky – as you move farther away from the root, there are more and more branches, and there is not enough room to keep them as separate as they were close to the root. Hyperbolic spaces do not have that problem.

Unsupervised hierarchy matching with optimal transport over hyperbolic space D. Alvarez-Melis et al. https://arxiv.org/abs/1911.02536 Numerically accurate hyperbolic embeddings using tiling-based models T. Yu and C. de Sa https://papers.nips.cc/paper/8476-numerically-accurate-hyperbolic-embeddings-using-tiling-based-models

We can put anything in a neural net, provided it is differentiable: this includes physics engines and renderers (computer graphics), which can be part of a computer vision system.

Perception as generative reasoning: structure, causality probability Perception and action from generative models of physics K. Smith https://slideslive.com/38923115/perception-and-action-from-generative-models-of-physics Scene representation neworks: continuous 3D structure aware neural scene representation V. Sitzmann et al. https://arxiv.org/abs/1906.01618 https://slideslive.com/38922305/scene-representation-networks-continuous-3dstructureaware-neural-scene-representations

Some computer vision systems try to recognize the elements of a 3-dimensional scene from an image, by describing it using simple geometric shapes, such as boxes or ellipsoids. Instead, they could use convex shapes, each defined as an intersection of half-spaces.

CvxNet: learnable convex decomposition B. Deng et al. https://arxiv.org/abs/1909.05736

Instead of directly estimating the density function of the data, one can estimate the score function (the derivative of the log-density).

Generative modeling by estimating gradients of the data distribution Y. Song and S. Ermon https://arxiv.org/abs/1907.05600 https://slideslive.com/38922607/generative-modeling-by-estimating-gradients-of-the-data-distribution

To complete a large matrix, e.g., user×item, for a recommendation system, or (S,V)×O, for a knowledge base storing (S,V,O) triples, one can replace the rows and columns with vector embeddings; this has the added advantage of allowing for new values.

Learning DAGs and trees with box embeddings and hyperbolic embeddings A. McCallum https://slideslive.com/38923993/learning-dags-and-trees-with-box-embeddings-and-hyperbolic-embeddings

Random projections, e.g., by multiplying with a sparse matrix, are a surprisingly good way of reducing dimension.

Understanding sparse JL for feature hashing M. Jagadeesan https://arxiv.org/abs/1903.03605 https://slideslive.com/38924029/understanding-sparse-jl-for-feature-hashing

PCA is not fair: if the population is divided into groups, it minimizes the (weighted) sum of the reconstruction errors for the groups, but those group-level reconstruction errors can be very different. Try other aggregation functions, e.g., replace the sum with a maximum – it will then minimize the reconstruction error for the worst-performing group.

Multicriteria dimensionality reduction with applications to fairness T. Tantipongpipat et al. https://papers.nips.cc/paper/9652-multi-criteria-dimensionality-reduction-with-applications-to-fairness

Infra-slow brain dynamics as a marker of cognitive function and decline S. Ajmera https://papers.nips.cc/paper/8918-infra-slow-brain-dynamics-as-a-marker-for-cognitive-function-and-decline

Randomized smoothing transforms a classifier to retain the most likely class in a neighbourhood of the query; this makes the classifier robust to random (non-adversarial) perturbations.

Provably robust deep learning via adversarially trained smoothed classifiers H. Salman et al. https://arxiv.org/abs/1906.04584

A stochastic classifier can be turned into a deterministic one by replacing randomness with arbitrariness – this is similar to (Floyd–Steinberg) dithering for images.

On making stochastic classifiers deterministic A. Cotter et al. https://papers.nips.cc/paper/9273-on-making-stochastic-classifiers-deterministic

Label smoothing replaces hard labels (e.g., “100% cat”) with soft ones (“90% cat”, “10% dog”).

When does label smoothing help? R. Müller et al. https://arxiv.org/abs/1906.02629

Instrumental variables (a staple of econometrics) are used to infer causality using linear models. Those linear regressions can be replaced by kernel regressions or (if there is enough data) deep neural nets.

Kernel instrumental variable regression R. Singh et al. https://arxiv.org/abs/1906.00232 EconML: a machine learning library for estimating heterogeneous treatment effects https://github.com/microsoft/EconML

To estimate the effects of an intervention from observational data, match each treated unit, not with a single control, but with a weighted average of controls.

Synthetic control A. Abadie et al. https://slideslive.com/38922869/synthetic-control

Foundations of causal inference: challenges and opportunities of big data N. Kiyavash https://slideslive.com/38922882/invited-talk-foundations-of-causal-inference Temporal point processes: web, social media, and networking systems N. Ganguly https://slideslive.com/38922886/invited-talk-web-social-media-and-networking-systems?locale=en The graph Hawkes network for reasoning on temporal knowledge graphs Z. Han et al. https://slideslive.com/38922891/the-graph-hawkes-network-for-reasoning-on-temporal-knowledge-graphs

Causal confusion in imitation learning P. deHaan et al. https://slideslive.com/38922949/causal-confusion-in-imitation-learning?locale=en https://arxiv.org/abs/1905.11979

Data collected by a bandit algorithm are unbalanced (we collect more data for the actions we believe are better) and leads to biased estimates of the value of each action. Inverse propensity weighting corrects this bias but increases variance. Other weighting schemes can help reduce the variance.

Bandits, estimation and hypothesis testing S. Athey https://slideslive.com/38923707/confidence-intervals-for-policy-evaluation-in-adaptive-experiments

Batched multi-arm bandits Z. Gao https://slideslive.com/38923272/batched-multiarmed-bandits-problem https://arxiv.org/abs/1904.01763 SIC-MMAB: synchronization involves communication in multi-player multi-arm bandits E. Boursier https://arxiv.org/abs/1809.08151 Recovering bandits C. Pike-Burke and S. Grunewalder https://arxiv.org/abs/1910.14354

The “successor representation” is the expected discounted future state occupancy (or features).

A neurally plausible model learns successor representation in partially observable environments E. Vértez and M. Sahani https://arxiv.org/abs/1906.09480 Better transfer learning with inferred successor maps T. Madarasz and T. Behrens https://arxiv.org/abs/1906.07663

In reinforcement learning, the value of an action depends on the resulting reward, which is often delayed. Instead of using the time elapsed since the action was taken to estimate how important the action was for that reward, use P(a|x,f), where a is the past action, x the past state and f the future outcome (present state).

Hindsight credit assignment A. Harutyunyan et al. https://arxiv.org/abs/1912.02503

Reinforcement learning systems learn faster if we enlarge the action space with “subgoals”.

Abstraction and meta-RL D. Abel https://slideslive.com/38923092/abstraction-and-metareinforcement-learning

In Q-learning, replacing the state-action value function Q with log(Q) allows for lower discount factors.

Using a logarithmic mapping to enable lower discount factors in reinforcement learning H. van Seijen et al. https://arxiv.org/abs/1906.00572

Reinforcement learning systems can generate mathematical proofs, at least is some very specific domains: for instance, given a polynomial P on n variables, they can prove or disprove ∀x∈[0,1]ⁿ P(x)≥0.

Learning dynamic polynomial proofs A. Fawzi et al. https://arxiv.org/abs/1906.01681

(Meta-learning is an important, trendy, but difficult topic.)

Temporal logic is the extension of propositional logic with a few temporal operators, “eventually”, “always” and “until”. It can be used to study the behaviour of multi-agent systems, for instance, how several automatic trading systems interact, to prove there will be no “flash crash”.

Unfortunately, this approach does not scale well: for real-world systems, one can instead use simulations of an agent-based system, calibrated on real data.

Understanding equilibria in multiagent systems M. Woolridge https://slideslive.com/38922280/invited-talk-3-understanding-equilibrium-properties-of-multiagent-systems

Temporal logic can also be used to define constraints or penalties when modeling point processes (e.g., “event A should occur before event B”).

Temporal logic point processes S. Li et al. https://slideslive.com/38922890/temporal-logic-point-processes

TVO is a refinement of evidence lower bound (ELBO) used in variational inference (VI).

Understanding thermodynamic variational inference R. Brekelmans et al. https://slideslive.com/38922752/contributed-talk-understanding-thermodynamic-variational-inference

Destructive learning identifies patterns in the data, removes them, and starts again with the residuals, until there is no identifiable pattern left.

Deep point process destructors D. Inouye https://slideslive.com/38922857/deep-point-process-destructors

After modeling the preferences of each stakeholder for a certain type of decision, we no longer need the actual stakeholders: we can directly use the models to vote in their stead.

This could be useful when the same type of decision, on slightly different data, has to be taken on a regular basis (e.g., daily), or when the decision has to be taken immediately.

Putting ethical AI to the vote A. Procaccia https://slideslive.com/38922282/invited-talk-4-putting-ethical-ai-to-the-vote

posted at: 14:30 | path: /ML | permanent link to this entry