Rather than a review of 2019 in machine learning, this is a review of (some of) what I read this year: this is biased towards my interests, incomplete, and some of the topics may already be a few years old.

1a. The main transformation in the machine learning landscape was undoubtedly the rise of language models: they are not only more powerful, trained on more data, they are also more directly usable, thanks to the HuggingFace transformers library.

https://huggingface.co/transformers/index.html

It is difficult, however, to keep track of which model is currently the best. When you read this, it may no longer be one of the following: BERT and its many variants (Albert (lightweight), RoBERTa (Facebook), DistilBERT, CamemBERT (French), Ernie (Baidu, Chinese), etc.), XLNet, XLM, GPT-2, etc.).

1b. Those models provide contextual word embeddings – in contrast to word2vec, GloVe, FastText, which are not contextual.

Visualizing and measuring the geometry of BERT https://arxiv.org/abs/1906.02715 Contextual string embeddings for sequence labelings https://pdfs.semanticscholar.org/421f/c2556836a6b441de806d7b393a35b6eaea58.pdf

1c. There has also been some work on multilingual models, in particular multilingual embeddings: for languages with less training data, one can simply learn a single model for several (related) languages.

Image search using multilingual texts: a cross-modal learning approach between image and text https://arxiv.org/abs/1903.11299 https://github.com/facebookresearch/MUSE

1d. Some (traditional) word embeddings can account for polysemy.

Linear algebraic structure of word senses with applications to polysemy https://arxiv.org/abs/1601.03764 Multimodal word distributions https://arxiv.org/abs/1704.08424

2. "Self-supervised learning" is a special case of unsupervised learning (or a first, pretraining, step in semi-supervised learning, or pre-training for supervised learning) in which we turn the unlabeled data into a supervised problem. For instance, given unlabeled images, we can train a model to tell if two patches come from the same image. Given sound data, we can train a model to tell if two patches are close in time or not – the features learned will identify phonemes, if we want very close patches, or speakers, for slightly more distant ones.

MixMatch: a holistic approach to semi-supervised learning https://arxiv.org/abs/1905.02249

3. Adversarial training (that is a new deep learning word for the more classical notion of "robust optimization") tries to make neural networks (slightly) more robust to adversarial attacks.

Provably Robust Deep Learning via Adversarially Trained Smoothed Classifiers https://arxiv.org/abs/1906.04584 Adversarial Examples Are Not Bugs, They Are Features https://arxiv.org/abs/1905.02175

4. We have a better understanding of the loss landscape of deep neural networks:

- The set of global minima is connected

- Wide extrema generalize better

- Stochastic gradient descent (SGD) tends to turn around one of those minima: average the model weights rather than the forecasts

- Different SGD runs will turn around different minima: do not average the weights but the forecasts

The following website shows very nice pictures of those landscapes.

https://losslandscape.com/

5a. Pure stochastic gradient descent (SGD) is not the only way to train a neural network. The gradient descent step is very similar to the Euler discretization of an ODE: many SGD variants can be interpreted as other ODE discretizations.

A differential equation for modeling Nesterov's accelerated gradient method: theory and insight https://arxiv.org/abs/1503.01243 Neural Tangent Kernel: Convergence and Generalization in Neural Networks https://arxiv.org/abs/1806.07572

5b. Since the successive estimates of the weights of a network form a sequence, we can use all the sequence-related tricks we learned in elementary calculus: Cesàro summation, convergence acceleration, etc.

Bridging the gap between constant step size stochastic gradient descent and Markov chains https://arxiv.org/abs/1707.06386 Constant-step-size least-mean-square and optimal sampling distributions https://arxiv.org/abs/1412.0156

5c. Not all training examples are equally relevant: importance sampling gives more weight to the most informative samples, identified by the norm of their gradient.

Not all samples are created equal: deep learning with importance sampling https://arxiv.org/abs/1803.00942

5d. With the rise of (clusters of) GPUs, distributed training can speed up computations but, because of the communication bandwidth required, the gains may not be as large as one would expect.

Byzantine-tolerant machine learning https://arxiv.org/abs/1703.02757 Deep gradient compression: reducing the communication bandwidth for distributed training https://arxiv.org/abs/1712.01887

5e. There are also alternatives to back-propagation, for instance by learning to forecast the gradients instead of actually computing them, or by training the layers separately, using auxiliary objectives.

Decoupled neural interfaces using synthetic gradients https://arxiv.org/abs/1608.05343 Putting An End to End-to-End: Gradient-Isolated Learning of Representations https://papers.nips.cc/paper/8568-putting-an-end-to-end-to-end-gradient-isolated-learning-of-representations

6a. Monte Carlo simulations usually rely on Metropolis-Hastings, Gibbs sampling, or Hamiltonian Monte Carlo (HMC), but these are not the only choices. Langevin Monte Carlo is very similar to gradient descent: move in the direction of the gradient of the log posterior probability, but add noise.

Langevin diffusions and the Metropolis-adjusted Langevin algorithm https://arxiv.org/abs/1309.2983 Riemann manifold Langevin Hamiltonian Monte Carlo https://pdfs.semanticscholar.org/16c5/06c5bb253f7528ddcc80c72673fabf584f32.pdf

6b. Most Monte Carlo simulation methods are reversible, but that is not actually needed: non-reversible alternatives may converge faster.

Stochastic bouncy particle sampler https://arxiv.org/abs/1609.00770 The bouncy particle sampler: a nonreversible rejection-free Markov chain Monte Carlo method https://arxiv.org/abs/1510.02451 Non-reversible Metropolis-Hastings https://arxiv.org/abs/1401.8087 Irreversible Monte Carlo algorithms for efficient sampling https://arxiv.org/abs/0809.0916 Liftings of tree-structured Markov chains https://people.eecs.berkeley.edu/~sinclair/liftings.pdf The zig-zag process and super-efficient sampling for Bayesian analysis of big data https://arxiv.org/abs/1607.03188 A piecewise deterministic scaling limit of lifted Metropolis-Hastings in the Curie-Weiss model https://arxiv.org/abs/1509.00302

7. Graph data is becoming easier to use.

7a. A simple way to use graph data is to use a graph kernel, i.e., a way of defining how close two nodes are.

Kernels and regularization on graphs https://people.cs.uchicago.edu/~risi/papers/SmolaKondor.pdf Weisfeiler-Lehman graph kernels http://www.jmlr.org/papers/volume12/shervashidze11a/shervashidze11a.pdf A persistent Weisfeiler-Lehman procedure for graph classification http://proceedings.mlr.press/v97/rieck19a/rieck19a.pdf

Another way is to embed the nodes in a Euclidean space, for instance using word2vec on random walks on the graph.

DeepWalk: online learning of social representations https://arxiv.org/abs/1403.6652 node2vec: scalable feature learning for networks https://arxiv.org/abs/1607.00653 Anonymous walk embeddings https://arxiv.org/abs/1805.11921 Structural deep network embedding https://www.kdd.org/kdd2016/papers/files/rfp0191-wangAemb.pdf Link prediction via subgraph embedding-based convex matrix completion http://gerard.demelo.org/papers/link-prediction-subgraphembeddings.pdf GraRep: learning graph representations with global structural information https://paperswithcode.com/paper/grarep-learning-graph-representations-with DeepTrax: embedding graphs of financial transactions https://arxiv.org/abs/1907.07225 Holographic embeddings of knowledge graphs https://arxiv.org/abs/1510.04935

Many calculus notions (derivatives, Fourier analysis, etc.) can be discretized using a grid, which is a special type of graph. It turns out that those notions can be generalized to arbitrary graphs: signal processing on graphs.

Multi-dimensional graph Fourier transform https://arxiv.org/abs/1712.07811 Calculus on graphs https://arxiv.org/abs/cs/0408028

In particular, the notion of convolution can be generalized from grids to graphs.

CayleyNets: graph convolutional neural networks with complex rational spectral filters https://arxiv.org/abs/1705.07664 Link prediction based on graph neural networks https://arxiv.org/abs/1802.09691 Modeling relational data with graph convolutional networks https://arxiv.org/abs/1703.06103 Graph convolutional neural networks for web-scale recommender systems https://arxiv.org/abs/1806.01973 Convolutional neural networks on graphs with fast localized spectral filtering https://arxiv.org/abs/1606.09375 Graph2Seq: graph to sequence learning with attention-based neural networks https://arxiv.org/abs/1804.00823

GANs are still everywhere and can be used to generate random graphs, hopefully more realistic than the classical models (Erdős–Rényi, Barabási–Albert (preferential attachment), Watts–Strogatz (rewired ring lattice), exponential random graph, stochastic block model).

Learning deep generative models of graphs https://arxiv.org/abs/1803.03324

8a. Reinforcement learning is still a very hot topic, but it remains highly technical: contrary to mainstream machine learning algorithms and neural networks, very few of us actually use it.

Here is some learning material:

Algorithms for reinforcement learning (2009) https://sites.ualberta.ca/~szepesva/papers/RLAlgsInMDPs.pdf Stanford course https://www.youtube.com/playlist?list=PLoROMvodv4rOSOPzutgyCTapiGlY2Nd8u Berkeley course https://www.youtube.com/playlist?list=PLkFD6_40KJIwhWJpGazJ9VSj9CFMkb79A

8b. There are two main concerns with many of the recent reinforcement learning "successes":

- They are not sample-efficient – they require an insane amount of training, equivalent to 1000 or 10,000 years of human time;

- They focus on a single, very specific task, and cannot easily generalize to other tasks.

Meta-learning tries to respond to those challenges, by training a network for learn new tasks from just a few samples (few-shot learning).

Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks https://arxiv.org/abs/1703.03400 How to train your MAML https://arxiv.org/abs/1810.09502 RL²: Fast Reinforcement Learning via Slow Reinforcement Learning https://arxiv.org/abs/1611.02779

Here is a simple meta-learning benchmark, which can be used as an intelligence test (for computers):

On the Measure of Intelligence https://arxiv.org/abs/1911.01547

8c. Bayesian methods still have their role to play in the low-data regime.

9. Variational inference is another of those technical topics. The basic idea is simple: we want to compute a probability distribution p, usually the posterior from some Bayesian model, but it is intractable. Instead, we will try to find a simpler distribution q, tractable, but close to p. To measure closeness, we use the KL divergence KL(q‖p) – even if p is intractable, KL(q‖p) may be easier to compute.

For instance, we can search for q as independent Gaussians ("mean field approximation"). Since this completely forgets the dependence structure in p, it looks like a bad idea – but even if the variational distribution q is far from the target p, most of what we want to do with it, in particular, computing expectations, will give similar results.

Unfortunately, this requires ad hoc computations, specific to each model.

A tutorial on variational Bayesian inference http://www.orchid.ac.uk/eprints/40/1/fox_vbtut.pdf Automatic differentiation variational inference https://arxiv.org/abs/1603.00788

10. Geometry

10a. The Kullback-Leibler (KL) divergence is often used to compute the "distance" between distributions. For instance, minimizing the log-likelihood of a model is equivalent to minimizing the KL divergence between the data and the model. But it is often insufficient, because it ignores the geometry of the underlying space: if two distributions have disjoint support, they will be maximally distant for the KL divergence, regardless of the distance between their supports.

The Wasserstein distance (contrary to the KL divergence, it is actually a distance) addresses those problems but, unfortunately, it it difficult to compute. The Sinkhorn distance is a regularization of the Wasserstein distance. The sliced Wasserstein distance computes the Wasserstein distance after an random projection on a 1-dimensional subspace (where it is easy to compute) and averages several of those distances.

Sinkhorn distances: lightspeed computation of optimal transportation distances https://arxiv.org/abs/1306.0895 Fast computation of Wasserstein barycenters https://arxiv.org/abs/1310.4375 Sliced Gromov-Wasserstein https://arxiv.org/abs/1905.10124 Large-scale optimal transport and mapping estimation https://arxiv.org/abs/1711.02283

The Wasserstein distance is also used in GANs: even if the discriminator is good, and contrary to the KL divergence, the gradient of the Wasserstein distance tells the generator in which direction to go.

Improved training of Wasserstein GANs https://arxiv.org/abs/1704.00028

10b. Topological data analysis (TDA) remains an underground topic, in spite of the existence of the "TDA" R package which hides all the complexity from the end user. It has been applied to study the complexity of neural networks, and to regularize them.

On characterizing the capacity of neural networks using algebraic topology https://arxiv.org/abs/1802.04443 Neural persistence: a complexity measure for deep neural networks using algebraic topology https://arxiv.org/abs/1812.09764 Connectivity-optimized representation learning via persistent homology https://arxiv.org/abs/1906.09003 A topological regularizer for classifiers via persistent homology https://arxiv.org/abs/1806.10714 An introduction to topological data analysis: fundamental and practical aspects for data scientists https://arxiv.org/abs/1710.04019

10c. Other bits of algebraic topology find applications in statistics.

Statistical inference using the Morse-Smale complex https://arxiv.org/abs/1506.08826 Morse-Smale regression https://www.ncbi.nlm.nih.gov/pubmed/23687424 Visual exploration of high-dimensional scalar functions http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.226.3032&rep=rep1&type=pdf Data analysis with the Morse-Smale complex: the msr package for R https://www.jstatsoft.org/article/view/v050i02/v50i02.pdf

10d. Deep learning uses a lot of linear transformations and Euclidean distances but, for some types of data, Euclidean space is not the best choice. For instance, trees are difficult to embed in flat spaces: if you start from the root, add three children to it, add three children to each of them, and repeat a few times, the nodes become closer and closer. Hyperbolic spaces do not have that problem and are therefore a good choice to compute embeddings of hierarchies, trees or more general graphs.

On the tree-likeness of hyperbolic spaces https://arxiv.org/abs/1105.3925 Hyperbolic deep learning for Chinese natural language understanding https://arxiv.org/abs/1812.10408 Hyperbolic neural networks https://arxiv.org/abs/1805.09112 Hyperbolic attention networks https://arxiv.org/abs/1805.09786 Representation trade-offs for hyperbolic embeddings https://arxiv.org/abs/1804.03329 Reconstructing approximate tree metrics http://www.cs.yale.edu/homes/mahesh/papers/approx-tree.pdf Learning continuous hierarchies in the Lorentz model of hyperbolic geometry https://arxiv.org/abs/1806.03417

10e. When training a neural network, we often consider the space in which the weights live as a vector space. But points in that space represent models of the data, and the Euclidean distance between those points does not correspond to a useful notion of distance between those models. It is already visible with a simple model, univariate Gaussians. There are two parameters, μ and σ (or μ and σ², or μ and log σ, or anything you want – the fact that there are several meaningful reparametrizations leading to different Euclidean distances is already troubling). We are tempted to say that the distance between N(0,1) and N(1,1) is 1, and so it the distance between N(0,σ²) and N(1,σ²), regardless of the variance. But when the variance is low, N(0,σ²) and N(1,σ²) are very easy to distinguish, while if the variance is high, they are almost indistinguishable: to reflect this, we need something else than the Euclidean distance.

It is possible to define a "metric" (a Riemannian structure) using the Fisher information matrix that behaves as we expect: the distance between N(0,σ²) and N(1,σ²) decreases as σ increases.

The same idea applies to neural networks, and has applications to their training: the Riemannian structure defines a more geometric gradient, called the "natural gradient", which speeds up convergence.

Information-geometric optimization algorithms: a unifying picture via invariance principles https://arxiv.org/abs/1106.3708 The Riemannian geometry of deep generative models https://arxiv.org/abs/1711.08014 Limitations of the empirical Fisher approximation https://arxiv.org/abs/1905.12558

11a. Interpretable machine learning

https://christophm.github.io/interpretable-ml-book/

11b. Researchers are more and more concerned by ethical issues, for instance, that of "fairness". Unfortunately, there are several legitimate, but incompatible notions of "fairness" (statistical parity, equal opportunities, disparate impact, average odds, etc.).

Long list of fairness measures and mitigation algorithms (pre-, in- and post-processing) https://github.com/IBM/AIF360

While you should worry about those topics, and even assess the fairness of your models, you should leave the choice of which definition of "fairness" to use and of the trade-off between fairness and performance to someone else (stakeholders, legal department, management, etc.) – otherwise, your choices will be challenged.

12. Other topics

12a. New layer types

Lattice layers can enforce monotonicity constraints

Deep lattice networks and partial monotonic functions https://arxiv.org/abs/1709.06680 Monotonic calibrated interpolated look-up tables https://arxiv.org/abs/1505.06378 Lattice regression https://papers.nips.cc/paper/3694-lattice-regression.pdf Fast and flexible monotonic functions with ensembles of lattices https://papers.nips.cc/paper/6377-fast-and-flexible-monotonic-functions-with-ensembles-of-lattices

Constrained optimization layer

Differentiable Convex Optimization Layers http://web.stanford.edu/~boyd/papers/pdf/diff_cvxpy.pdf https://github.com/cvxgrp/cvxpylayers

Variance layers

Variance networks: when expectation does not meet your expectations https://arxiv.org/abs/1803.03764

12b. Data structures, in computer science (think of hash tables), are usually designed to provide the correct answer. For a few years, probabilistic data structures, which return the correct answer with high probability, have risen in popularity (for instance, Bloom filters). Those probabilistic data structures can now be learned from data.

The case for learned index structures https://arxiv.org/abs/1712.01208

12c. Tensor decompositions

Tensor networks https://tensornetwork.org/

12d. RKHS

A primer on reproducing kernel Hilbert spaces https://arxiv.org/abs/1408.0952

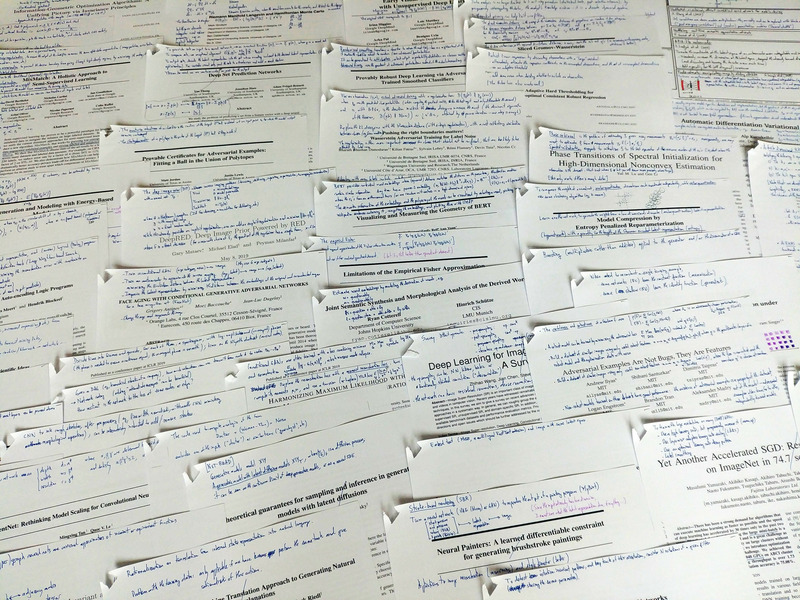

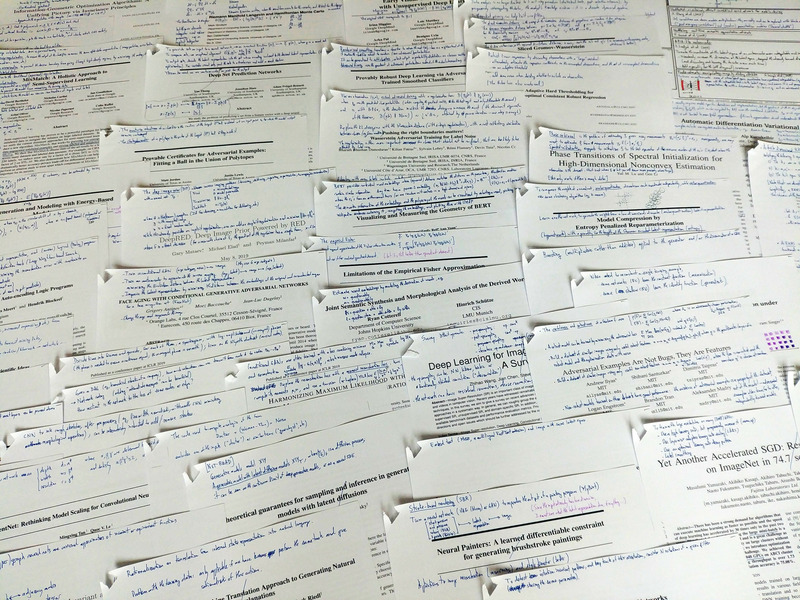

My summary of those papers (and many more, in reverse chronological order of reading time) is here:

http://zoonek.free.fr/Ecrits/articles.pdf

posted at: 11:36 | path: /R | permanent link to this entry